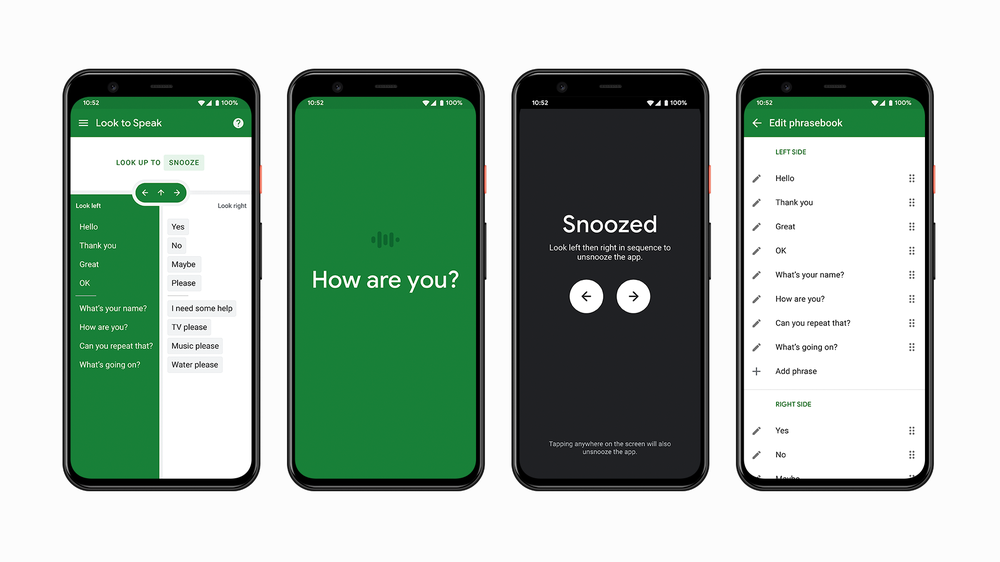

Look to Speak uses eye-gaze technology to let people select pre-written phrases to be spoken aloud.

By Aimee Chanthadavong. Reprinted from ZDNet: Innovation.

Google, together with speech and language therapist Richard Cave, has developed an experimental Android app designed to provide people living with speech and motor impairments, particularly those who are non-verbal and require communication assistance, with a way to express themselves.

Look to Speak uses machine learning and eye-gaze technology to let people look left, right, or up to select a phrase from a list for their phone to speak aloud. The app can also be used to snooze the screen and to edit the user's phrase book.

Phrases include "Hello," "Thank you," "Yes," and "No."

“As mobile devices become more ubiquitous and powerful, with technologies like machine learning built right into them, I’ve thought about the ways phones can work alongside assistive technologies. Together, these tools can open up new possibilities — especially for people around the world who might now have access to this technology for the very first time,” Cave wrote in a blog post.

Cave believes Look to Speak could serve as an extension of existing eye-gaze technology.

“We’re not replacing all of this kind of heavy-duty communication aid stuff because there’s a lot of functionality in there. Look to Speak is for those important short messages where the other communication device can’t go,” he said.